Sandra Peter and Kai Riemer

Generative AI and creative work

This week: how generative Artificial Intelligence and synthetic media change the business of creative work.

Sandra Peter (Sydney Business Insights) and Kai Riemer (Digital Futures Research Group) meet once a week to put their own spin on news that is impacting the future of business in The Future, This Week.

The stories this week

09:01 – How synthetic media will transform business

Other stories we bring up

Renewables comprised 68.7% of power on the Australian grid on 28 October 2022

Temu became the most downloaded shopping app in the United States

Our 2019 discussion on The Future, This Week with Barney Tan on #ChinaTech and the rise of TikTok

Our previous discussions with Kishi Pan on Xiaohongshu (Little RED Book) and shopping holidays in China

Employee access to Twitter’s content moderation tools limited after the Musk acquisition

Musk tells advertisers he wants Twitter to be the digital town square

The AI-generated Elon Musk explainer

Our previous discussion with Kellie Nuttall from Deloitte on AI fluency in Australian organisations

Foundational models are changing how AIs are trained

Our episode of The Unlearn Project on automation making jobs harder

How AI-generated art is transforming creative work

AI restoration of 19th century portraits

AI colourised video of 1930s London

Our previous discussion with Genevieve Bell on why data is not the new oil

Creative Fabrica launches generative AI tool

Shutterstock will sell AI-generated art and try to compensate human artists

Our previous conversations on The Future, This Week around GPT-3 and AI essay writing, the implications of using public data in AI models, how AI is changing the movie industry and weird new jobs of the future

Mike Seymour’s insight on AI art creation and the role of the artist

Georgina Macneil’s insight on where AI-generated art fits within art history

Follow the show on Apple Podcasts, Spotify, Overcast, Google Podcasts, Pocket Casts or wherever you get your podcasts. You can follow Sydney Business Insights on Flipboard, LinkedIn, Twitter and WeChat to keep updated with our latest insights.

Send us your news ideas to sbi@sydney.edu.au.

Music by Cinephonix.

Dr Sandra Peter is the Director of Sydney Executive Plus and Associate Professor at the University of Sydney Business School. Her research and practice focus on engaging with the future in productive ways, and on the impact of emerging technologies on business and society.

Kai Riemer is Professor of Information Technology and Organisation, and Director of Sydney Executive Plus at the University of Sydney Business School. Kai's research interest is in Disruptive Technologies, Enterprise Social Media, Virtual Work, Collaborative Technologies and the Philosophy of Technology.


We believe in open and honest access to knowledge. We use a Creative Commons Attribution NoDerivatives licence for our articles and podcasts, so you can republish them for free, online or in print.

Transcript

Disclaimer We'd like to advise that the following program may contain real news, occasional philosophy, and ideas that may offend some listeners.

Kai It's time to talk about AI.

Sandra Again.

Kai This time, generative AI.

Sandra AI has entered creative work.

Kai It's changing the work of designers, of artists, of anyone really who works with media or with graphic design.

Sandra Some of our listeners might have heard over the last few months about DALL-E, about Midjourney, about Stable Diffusion. It's time now to finally unpack it.

Kai Let's do it.

Intro From The University of Sydney Business School, this is Sydney Business Insights, an initiative that explores the future of business. And you're listening to The Future, This Week, where Sandra Peter and Kai Riemer sit down every week to rethink trends in technology and business.

Sandra Before we talk AI, there were a couple of other news items that we must bring up today, one of them like a really good news item, right?

Kai Last Friday, the Australian electricity grid set a new record for the proportion of electricity generated by renewables.

Sandra Woohoo! A good record, right?

Kai A good record for once, yes. 68.7% of electricity in Australia was generated by renewables at 12:30pm, so midday on the 28th of October, and that's a new record. In some states like Western Australia, it went up all the way to about 80%.

Sandra That's pretty cool.

Kai That is pretty cool. It shows that renewables are having an actual impact: only 29% in that period came from coal. Obviously, that's a midday measurement, but batteries are being rolled out across the country as well, which will allow the grid to draw more on renewables during, you know, the darker parts of the 24-hour period. And most of it came from rooftop solar, which shows that all that solar on people's roofs is having an impact on the energy mix.

Sandra That's pretty good news. There's also been other kind of obscure news.

Kai Yeah yours is weird, isn't it?

Sandra It is quite weird. I feel this is like the very early TikTok moment where, you know, quite a few years ago now, we heard about TikTok and we were like, 'what is this app? And what is it for?'

Kai Yeah, like karaoke and kids. Musical.ly, TikTok. That was a weird moment. And now it's big.

Sandra Now it's big, and it's everywhere. And it's just, you know, it's TikTok.

Kai But this is shopping.

Sandra This is an obscure shopping app that has become, over the last week, the most downloaded shopping app in the US. The app's called Temu, I think that's how you pronounce it, because no one seems to know. It's an e-commerce app that's gone to the top of the charts in the US, which means it's had to beat Amazon, it's had to beat Walmart, and even SHEIN, the other Chinese competitor. Temu is a Chinese app too.

Kai To be fair, those apps are on many phones already. But it is significant, and it's making a steep rise.

Sandra A very, very steep rise. In a sense, it's been around in China for a long time: it's a global version of the Chinese e-commerce company and app Pinduoduo, which is huge and has beaten Alibaba in China. They're one of the big Chinese companies now attempting to replicate in the US the success that ByteDance, the company behind Douyin, has had with TikTok by entering a different market; SHEIN has done the same. But just to give it context, Pinduoduo managed to take on Alibaba, the established competitor in China, and it now has over 730 million active users every month.

Kai About twice as many users as the US has people.

Sandra Every month, that is active users. And it's known for extremely, extremely dirt-cheap prices and all sorts of other gimmicks that they do to keep people on the platform.

Kai I feel we should talk about this. Remember we did Little Red Book?

Sandra We did TikTok first, then we did Xiaohongshu, Little Red Book, with Kishi. It's time, I think, to have another one of those 'you've never heard of this and it's huge' episodes.

Kai Okay, we should do that in one of our next episodes. But I also have, of course, it's a Musk.

Sandra It's a Musk. Yes, it had to come up.

Kai So chief twit Elon has seized the main seat at the big blue bird.

Sandra Now it looks like it's the only seat.

Kai Yes, he's basically taken all the other chairs out of the boardroom and made himself the sole Director and Chief Executive of the company. And he's making changes; he is firing people.

Sandra As he came in, right, he fired the company's CEO and CFO.

Kai Anyone with a C in front of their title is gone.

Sandra Pretty much, yeah.

Kai But also he's spilling a lot of other people, reportedly in the content moderation part of the company, and other parts. And he's looking for ways to charge, because he paid a hefty markup on Twitter in a market environment where lots of tech companies have gone down in value. He has to recover some of that cost, apparently.

Sandra And most of the money Twitter makes nowadays is from advertising. So it looks like they're going to try to monetise other parts of Twitter, one of them could be the blue tick.

Kai Yeah, the verified user tick for which he wants to start charging a monthly fee, not quite clear where it will land. That's one of those ideas. And I'm sure there'll be other subscription tiers, different models that they'll experiment with. But there's also, of course, this whole discussion around what will it mean for what the platform looks like? What discourse on the platform is like, and we're not quite clear where this is moving, because he's been sending mixed signals.

Sandra Yes. On the one hand, he's been known as a free speech absolutist: anything should go, you should be allowed to say anything you want, and everyone should be allowed to be on the platform. On the other hand, he now seems to be signalling that there needs to be some sort of content moderation, but seemingly not the type of content moderation that Twitter is doing now.

Kai Yeah, it's not quite clear where he lands, but he has moderated his stance on content moderation. He says that it's "important for the future of civilization to have a common digital town square, where a wide range of beliefs can be debated in a healthy manner, without resorting to violence". Probably acknowledging that if anything goes, and the trolling and inciting violence and everything is allowed, it will crowd out certain views and people might leave the platform, so remains to be seen how big the changes around content moderation will actually be. Also, there's the European Union and quite harsh legislation.

Sandra But in the meantime, Elon Musk has also appeared in a deepfake ad.

Kai Yeah, as if he wasn't all over the media already.

Sandra The deepfake ad, which is actually an explainer for a new type of startup investment platform, doesn't have permission from Elon Musk to use his face. So it uses a deepfake version of him, which, by the way, looks quite good. We'll include the link in the shownotes.

Kai It has him in the bathtub, though.

Sandra Yes. It's a spoof on the Wolf of Wall Street.

Kai It is a bit disturbing. Yes.

Sandra And the video has a robust disclaimer saying that, you know, this is satire, this is parody. But still, Elon Musk had nothing to do with the making of it. And the company has received active interest from over 20,000 people from 80 countries in their products and services.

Kai Yeah, genius move, include Elon Musk without his permission, media picks it up, gives you extra publicity.

Sandra It's not the only one, right? There are a number of celebrities who are faced with people using deepfake versions of them in what is clearly parody or satire, but which nonetheless associates their clout with the products or services being spruiked. There was another one featuring Tom Cruise and Leonardo DiCaprio.

Kai It's almost as if the story takes us directly to the main topic of today, which is how synthetic media will transform the world of business. That's one of them, not exactly the one we're going to talk about. But this AI generated synthetic media is now everywhere. The diffusion of these technologies is really democratising the creation of what's now called synthetic media or synthetic content.

Sandra That means every one of us and every one of our listeners could go online now and use one of these AI platforms to generate an image of pretty much anything, in the style of anything they can think of: a photo, a drawing, a Van Gogh, anything they want, plant, animal, mineral.

Kai And the story today comes from Computerworld. And it really talks about the various kinds of synthetic media that there are, and then homes in on the kinds of technologies that are making the most headlines at the moment, which is DALL-E, Stable Diffusion or Midjourney, what is now commonly known as generative AI, which also includes text-based systems such as GPT-3.

Sandra And we'll include a few links in the shownotes because we've touched especially on text generators and a few other ones of these technologies quite a few times on The Future, This Week. But today, we're talking about, as you mentioned, things like DALL-E or Stable Diffusion or Midjourney, which over the last few months have really allowed us to create hyper-realistic images of pretty much just anything we want, just by typing text into a text box. So I would say, 'I want to see a poodle riding a surfboard in front of the Sydney Opera House'.

Kai And lo and behold, you'll get a few versions of that, not everything looks hyper-realistic, but things are getting pretty close.

Sandra And that's what we call synthetic media.

Kai Yeah, synthetic media or synthetic content seems to have become the catch-all phrase that includes the imagery produced by this generative AI, but also things like digital humans, deepfakes, content that goes into the metaverse, 3D versions of content. So it's an umbrella term. But at the very heart of it is what we call generative AI: those big models, which we also call foundational models, trained either on large amounts of text from the internet, as in GPT-3, or on large databases of images scraped from all over the internet and public sources, which underpin systems like DALL-E.

Sandra It could be anything, right? It could be text that it's trained on, it could be images, it could be audio to generate synthetic audio...

Kai Voices, yeah, sound.

Sandra It could be video to generate synthetic video. But the pace at which these have taken off is tremendous. Take just one of them, DALL-E, which is open for everyone to use and costs only a couple of cents per image. DALL-E has more than 1.5 million users generating images, to the tune of about 2 million images every single day. And that's only one platform.

Kai Which all feeds back onto the internet.

Sandra More cat pictures.

Kai But we want to talk about how these technologies are already starting to change various business practices in the creative industries, and about what these technologies might become; we can already see glimpses of that. We're at the beginning of what might well be quite a disruptive event.

Sandra And that is a disruptive event for the types of jobs and tasks that people do, a disruptive event for well-established business models, not only in the creative arts but also internet business models, and quite a disruptive moment for how we think about what art is.

Kai Let's start by pointing out that it does, in some fundamental way, challenge the narrative around automation. I mean, we've put our Unlearn Project episode in the shownotes, where we showed that the idea that AI takes away the menial work and leaves us with better work is not quite true; sometimes it makes our work harder. But it also doesn't seem to be true that AI only takes away the, you know, the stupid, menial tasks and humans are left with all the glorious creative work, because now these systems go to the very heart of creative design work.

Sandra But here's where some people might go, 'oh well, you have to be very creative to think of a poodle on a surfboard in front of the Sydney Opera House'. So let's have a quick look at what people actually do with this, and not as a fun pastime thing before they start recording a podcast, but as part of their work or their creative process.

Kai For instance, Kevin Roose, in a recent New York Times article, spoke to quite a few different people in the creative industries about what they do with DALL-E and these kinds of technologies. He spoke to a game designer, for example, who uses DALL-E to illustrate each new release in his online game. He previously had to, you know, source images from a freelancer, which he says was not only costly for what is quite a niche game, but also took him quite a bit of time reviewing artists' renditions. Now he's able to come up with really good solutions in a matter of minutes.

Sandra These tools have also changed how people do restoration. We'll include a link in the shownotes to how people have used AI tech to bring 19th century portraits back to life, adding colour, and then movement and emotions. There's also a wonderful example of a film showing a day in London in the 1930s that has been upgraded and colourised to better reflect what the day might have looked like at the time.

Kai The New York Times also spoke to Patrick Clair, a filmmaker here in Sydney who has worked on projects like Westworld. He pointed out that he often needs to pitch ideas to a film studio, so he needs visual elements to showcase what he has in mind. He would spend a lot of time going through image databases like Shutterstock to find the right image. Now he can put a prompt into DALL-E and iterate to find a picture that comes much closer to what he actually wants to show than anything he would normally find in databases of pre-existing pictures. The technology now has a place in his creative workflow.

Sandra And even here on the SBI team, we've used images created by DALL-E and Stable Diffusion as artwork for some of the posts we put up on our website. Steven Sommer has created a few of these works, using various software to find a perfect match for what we were trying to illustrate, things like 'Data is not the new oil'.

Megan And my birthday card.

Kai Yeah, and we also created a birthday card for Megan with DALL-E. But the point is really that you can illustrate abstract concepts by playing around with prompts. And you can fairly easily do things that would otherwise take a lot of work, either in computer imaging or in photoshoots. You can have dogs playing poker; you can have animals surfing.

Sandra Things that might be impossible to make, or just very, very costly to access.

Kai Yeah. And now you can do this in a very easy and straightforward way. And it's not just fully coloured things; you can use this as a sketch tool. There's a service designer quoted in the same article who creates very simple black and white drawings to illustrate, say, process models or handbooks and things like that, improving the workflow. She and other people say that at this stage they might not necessarily include DALL-E renditions in their final works, but in the creative process of iterating towards what the final work might look like, it has become a really useful tool.

Sandra You could argue here that one might still need an eye for design and for the concept that goes into this. And in some cases, that is true. But often the design eye comes from using a very particular style that relies on someone else, either an artist or another designer, having thought deeply about these elements and where to place things on the canvas, and then importing that style to add the design to the concept you want to achieve. And increasingly, in programs like DALL-E and Stable Diffusion, there are many aids that help with that process, either by autocompleting the prompt you want to write or by using GPT-3 to add to what you're doing.

Kai There are also websites now where you can get ideas for what prompts would look like, or copy and paste a prompt and then just customise it.

Sandra So a lot of scaffolding, a lot of help, and a lot of drawing on previous creative work.

Kai So let's talk about what this means for various actors in this industry. And the first one would be designers of various kinds.

Sandra For people who do art, for instance for covers of magazines or books, it's on the one hand a tool they can employ to do the work more easily or to generate more concept art for a client. But it can also mean losing clients who now choose to do it themselves, because the platform enables them to do this at almost zero cost and with very little skill.

Kai There are already reports of designers who say that clients have jumped ship and are using generative AI to do what the designers would normally charge money for. And even artists who charge a lot for prime artwork often also do bread-and-butter, more commercial design work, and that work is now threatened by these systems.

Sandra Even we've used samples of this work: rather than going to a designer and saying, 'Hey, can you do some concept work around this', we've used the software to generate some early prototypes to help our thinking along.

Kai But it also has implications for the places designers would previously have sourced images from such as Shutterstock, or similar image databases.

Sandra These are online marketplaces where you can type in the type of imagery that you want, you know, 'man standing in front of the University of Sydney building smiling, whilst wearing a dark jacket'.

Kai And then hoping to find someone who has taken a picture like that, uploaded it, and is now selling it for royalty payments. The Computerworld article is fairly explicit about the ways in which these platforms might be disrupted. And we can already see that some are embracing the new technology. A TechCrunch article reports on a platform called Creative Fabrica, which gives access, for a monthly fee, to millions of stickers, artworks, clipart and all kinds of other things that designers can use in their work. They are now allowing users to use their own generative AI engine to create images and then sell them on the platform.

Sandra And it's not clear where this will end up. Many companies are struggling with what stance to take. We've seen, for instance, companies like Shutterstock first banning all AI-generated art from their website and actively removing any images generated by models such as DALL-E, Midjourney or Stable Diffusion. But now they're changing their stance and deciding, for instance, that they're going to allow images created by DALL-E on their platform, and that they will try to find ways to compensate the human artists whose work the AI model has drawn on to generate these images.

Kai And so these platforms find themselves really at a crossroads where it's not quite clear where the industry will end up. Because you could argue, you know, why would I want to licence or buy clipart that someone created with DALL-E? Why not just use DALL-E to create what I want the clipart to look like? So I think there's real soul searching going on at these platforms about where their niche will be, and there's certainly a redefinition of what access to imagery and artwork will look like.

Sandra It's also not quite clear where artists will come down in this new world, not only in terms of compensation and what they might get from people using their...

Kai Their style, really.

Sandra Their style to begin with. But some artists have already expressed...

Kai Frustration, anger...

Sandra Frustration, anger at the fact that, you know, they might have spent years or decades of their life developing a particular style. And we're not talking about artists like Van Gogh; we're talking about current artists who are now seeing their work used to generate new images, and any compensation they might get is very, very small. This also raises questions about what type of work gets included in the training data sets these models use.

Kai So just a reminder, these foundational models are built by taking in millions or even billions of pictures, often sourced from the internet, many of which are copyrighted works, artworks, all kinds of imagery. So when someone puts a prompt to these generative AI systems, they can say, 'I want something in the style of Van Gogh, or Rembrandt', or of popular contemporary artists who are still alive. Those artists now find themselves in a weird world where they are trying to sell their original artworks, while someone else can create images that look like they were painted in the same style.

Sandra So the way forward needs to include figuring out how to do consensual data collection, so that only the works of people who have agreed to have their work used to train AI models are included, and finding ways to genuinely compensate the people whose work is being used as inspiration.

Kai None of which will be easy, because DALL-E, for example, doesn't open up its training data set, while Stable Diffusion has made its training data searchable. So artists can actually see whether their work was included, which opens the door to compensation, or maybe to opting out of having their data included. So I think there's a lot of catching up to do with legislation and with how we think about business models there.

Sandra But that shouldn't take away from the fact that this is an enormously creative moment for entertainment, for media, for advertising, and really for democratising that space for everyone.

Kai It's also a time suck. It's so much fun playing around with these systems.

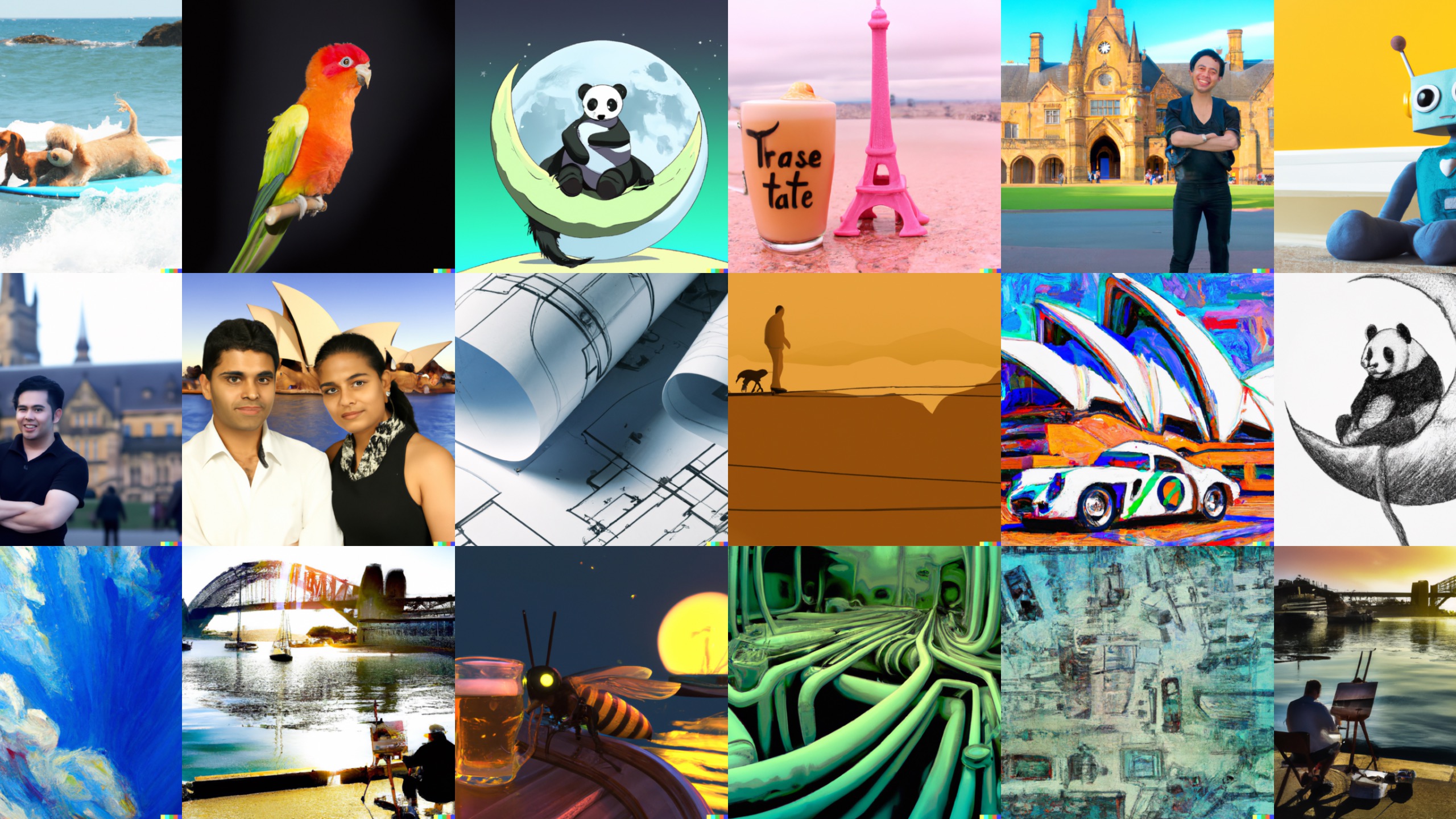

Sandra And if you want to see some good examples of this, you might want to have a look at the artwork for this episode of The Future, This Week. All of those images will have been created by one of these generative AI systems. And we'll include all the links in the show notes. If you've created your own art, send it to us, tweet it to us. We'd love to hear from you.

Kai And that's all we have time for today. We're going back to playing with this weird and wonderful world of generative AI.

Sandra Thanks for listening.

Kai Thanks for listening.

Outro You've been listening to The Future, This Week from The University of Sydney Business School. Sandra Peter is the Director of Sydney Business Insights and Kai Riemer is Professor of Information Technology and Organisation. Connect with us on LinkedIn, Twitter, and WeChat. And follow, like, or leave us a rating wherever you get your podcasts. If you have any weird or wonderful topics for us to discuss, send them to sbi@sydney.edu.au.

Sandra And that is a disruptive event for the types of jobs and tasks that people do a disruptive event for well-established business models, not only on the creative arts, but internet business models, and also quite a disruptive moment for how we think about what art is.

Kai Let's start by pointing out that it does challenge in some fundamental way, the narrative around automation. I mean, we've put in the shownotes our Unlearn Project episode, where we showed that the idea that AI takes away the menial work, will leave us with better work, is not quite true, sometimes it makes our work harder. But it also doesn't seem to be true that AI only takes away the, you know, the stupid, menial tasks and humans are left with all the glorious creative work because now these systems actually go to the very heart of creative design work.

Sandra But here's where some people might go, 'oh well, you have to be very creative to think of a poodle on a surfboard in front of the Sydney Opera House'. So let's have a quick look at what people actually do with this, and not as a fun pastime thing before they start recording a podcast, but as part of their work or their creative process.

Kai For instance, Kevin Roose, in a recent New York Times article spoke to quite a few different people in the creative industries, about what they do with DALL-E and these kinds of technologies. And they spoke to a game designer, for example, who uses DALL-E to illustrate each new release in his online game. He would previously have to, you know, source images from a freelancer, which he says was not only costly for what is quite a niche game, but also would take him quite a bit of time in reviewing artists renditions, and he's now able to come up with really good solutions in a matter of minutes.

Sandra They've also changed how people do restoration, we'll include a link in the shownotes to how people have used AI tech to bring 19th century portraits back to life, adding colour, and then movement and emotions to the portraits. There's also a wonderful example of a movie showing a day in London in the 1930s that has been upgraded and coloured to better reflect what the day might have looked like in the 1930s.

Kai The New York Times also spoke to Patrick Claire, who's a filmmaker here in Sydney, he's worked on projects like Westworld. And he pointed out that oftentimes he needs to pitch ideas to a film studio, so he needs to have visual elements to showcase what he has in mind. And he would spend a lot of time going through image databases like Shutterstock to find the right image. And now he can use DALL-E to put a prompt in and iterate and find a picture that comes much closer to what he wants to actually show, than what you would normally find in databases of pre-existing pictures, where the technology now has a place in his creative workflow.

Sandra And even here on the SBI team, we've used images and creations by DALL-E and by Stable Diffusion to add artwork for some of the posts that we put up on our website. Steven Sommer has created a few of these works using various software to find a perfect match to what we were trying to illustrate, things like Data is not the new oil.

Megan And my birthday card.

Kai Yeah, and we also created a birthday card for Megan with DALL-E. But the point is really that you can illustrate abstract concepts by playing around with prompts. And you can do things fairly easily that would otherwise take a lot of work either in computer imaging or otherwise photoshoots. You can have dogs play poker; you can have animals surfing.

Sandra Things that might be not only impossible to make, or might be just very, very costly to access.

Kai Yeah. And now you can do this in a very easy and straightforward way. And it's not just fully coloured things, you can use this as a sketch tool. There's a service designer quoted in the same article, she creates very simple black and white drawings to illustrate, say process models or handbooks and things like that, improving the workflow. She and other people say that they might not necessarily at this stage include DALL-E renditions in their final works, but in the creative process of iterating forward to what the final work might look like, it has become a really useful tool. you

Sandra You could argue here that one might still need an eye for design and for the concept that goes into this. And in some cases, that is true. But often the design eye comes from you using a very particular style that might rely on someone else, either an artist or another designer, having thought deeply about these elements and where you place things on the canvas, and then importing that style just to add the design to the concept that you want to achieve. And increasingly, in programs like DALL-E and Stable Diffusion, there are many prompts that help along with that process, either by auto completing the prompt that you want to design or by using GPT-3 to actually add to what you're doing.

Kai There's also websites now where you can actually get ideas for what prompts would look like or copy and paste the prompt and then just customise it.

Sandra So a lot of scaffolding, a lot of help, and a lot of drawing on previous creative work.

Kai So let's talk about what this means for various actors in this industry. And the first one would be designers of various kinds.

Sandra For people who do art for instance, for covers of magazines or of books, on the one hand, it's a tool that they can employ to help them do the work and do the work more easily or generate more concept art for a client. But it can also mean losing clients who now choose to do it themselves. Because the platform enables them to do this at almost zero cost and with very little skill.

Kai There are already reports of designers who say that clients have jumped ship and are using generative AI to do what they would normally charge money for. And even artists who charge a lot for prime artwork would also take on bread-and-butter, more commercial design work, and that is now threatened by the use of these systems.

Sandra Even we've used this kind of work. Rather than going to a designer and saying, 'Hey, can you do some concept work around this', we've used the software to generate some early prototypes to help our thinking along.

Kai But it also has implications for the places designers would previously have sourced images from such as Shutterstock, or similar image databases.

Sandra These are online marketplaces where you can type in the type of imagery that you want, you know, 'man standing in front of the University of Sydney building smiling, whilst wearing a dark jacket'.

Kai And then hoping that someone has taken a picture like that, uploaded it, and is now selling it for royalty payments. The Computerworld article is fairly explicit about the way in which these platforms might be disrupted. And we can already see that some are embracing the new technology. A TechCrunch article reports on a platform called Creative Fabrica, which gives access, for a monthly fee, to millions of stickers, artwork, clipart and all kinds of things that designers can use in their work. It is now allowing users to use the platform's own generative AI engine to create images and then sell them on the platform.

Sandra And it's not clear where this will end up. Many companies are struggling with what stance to take. We've seen, for instance, companies like Shutterstock first banning all AI-generated art from their website and actively going after and removing any images generated by models such as DALL-E, Midjourney or Stable Diffusion. But now they're changing their stance, deciding for instance that they're going to allow images created by DALL-E on their platform, and that they will try to find ways to compensate the human artists whose work the AI model has drawn on to generate these images.

Kai And so these platforms really find themselves at a crossroads where it's not quite clear where the industry will end up. Because you could argue, you know, why would I want to licence or buy clipart that someone created with DALL-E? Why not just use DALL-E to create what I want the clipart to look like? So I think there's real soul-searching going on with these platforms about where their niche will be. There's certainly a redefinition of what access to imagery and artwork will look like.

Sandra It's also not quite clear where artists will come down in this new world, not only in terms of compensation and what they might get from people using their...

Kai Their style, really.

Sandra Their style to begin with. But some artists have already expressed...

Kai Frustration, anger...

Sandra Frustration, anger at the fact that, you know, they might have spent years or decades of their life developing a particular style. And we're not talking about, you know, artists like Van Gogh. We're talking about current artists who are now seeing their work used to generate new images, and any compensation they might get is very, very small. This also raises questions about what type of work gets included in the training data sets that these models use.

Kai So just a reminder, these foundational models are built by taking in millions or even billions of pictures that are often sourced from the internet, many of which are copyrighted works, artworks, all kinds of imagery. So when someone puts a prompt to these generative AI systems, they can say, 'I want something in the style of Van Gogh, or Rembrandt', or of popular contemporary artists who are still alive. Those artists now find themselves in a weird world where they are trying to sell their original artworks, while someone can now create images that look like they were painted in the same style.

Sandra So the way forward needs to include figuring out how to do consensual data collection, so that only the works of people who have agreed to have their work used to train AI models are included, and finding ways to genuinely compensate the people whose work is being used as inspiration.

Kai None of which will be easy, because DALL-E, for example, doesn't open up its training data set, while Stable Diffusion's has been made searchable. So artists can actually see if their work was included, which opens the door to compensation, or maybe to opting out of having their work included. So I think there's a lot of catching up to do with legislation and with how we think about business models there.

Sandra But that shouldn't take away from the fact that this is an enormously creative moment for entertainment, for media, for advertising, and really for democratising that space for everyone.

Kai It's also a time suck. It's so much fun playing around with these systems.

Sandra And if you want to see some good examples of this, have a look at the artwork for this episode of The Future, This Week. All of those images were created by one of these generative AI systems. And we'll include all the links in the show notes. If you've created your own art, send it to us, tweet it to us. We'd love to hear from you.

Kai And that's all we have time for today. We're going back to playing in this weird and wonderful world of generative AI.

Sandra Thanks for listening.

Kai Thanks for listening.

Outro You've been listening to The Future, This Week from The University of Sydney Business School. Sandra Peter is the Director of Sydney Business Insights and Kai Riemer is Professor of Information Technology and Organisation. Connect with us on LinkedIn, Twitter, and WeChat. And follow, like, or leave us a rating wherever you get your podcasts. If you have any weird or wonderful topics for us to discuss, send them to sbi@sydney.edu.au.
