Clément Canonne

What maths can teach us about privacy

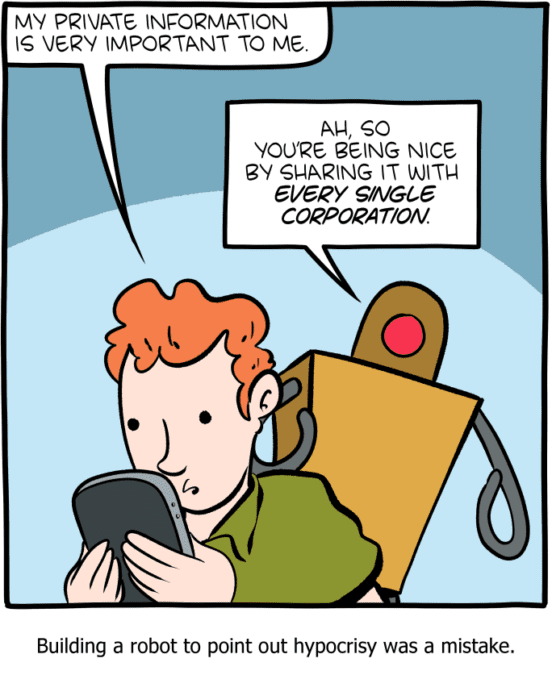

While ordering at my local café recently, I discovered it no longer offers a physical menu. Instead, I had to order via a QR code that redirected me to a third-party website. Going with the flow, we placed our order. But to proceed, more details were demanded: my name, email, address and birthday. That’s perfectly common now, right?

Common, but shocking. What is this information used for? Where is it stored? For how long? And why is it needed in the first place? I research data privacy, and even to me this obnoxious but increasingly frequent demand barely registered at the time.

Future-proofing privacy using maths

Privacy is hard to define, so how do we know when it’s being violated? Demographers and statisticians have been grappling with this for decades. Imagine the watercooler chat at the Australian Bureau of Statistics around Census time: how can we gather and analyse sometimes sensitive data to gain insights about the population without revealing too much about individuals? But there’s a harder question that those gathering the data should also ponder: how can we future-proof the privacy of the personal data we capture today, so that it is not violated years from now when dissected by more powerful computers?

While this is not easy, maths (my discipline) does have a solution. The obvious way, anonymising the data and removing all information that looks potentially identifying, is not good enough. As long ago as 2017, researchers from the University of Melbourne were able to link “deidentified” medical data back to individual patients, despite the government’s assurances about privacy. Any speculation about “what could happen” or “how one could try and hack the data” cannot fully anticipate what will happen, and how someone will actually try and hack the data. Our forever privacy cannot hinge on assumptions about future bad actors.

It may be because of their experience with ever-changing technology and bad actors, then, that the most widely recognised approach to data privacy came from the security and cryptography community, where the rule of the game is to assume nothing and defend against adversaries with vastly more computational resources, money, and time than you. Cryptography researchers came up with a radical idea, a mathematical definition called differential privacy, that puts a very specific requirement on the algorithm. Under this principle, an algorithm run on users’ data must guarantee that its output, whatever it turns out to be, would have been almost as likely had any single user been removed from the data set.
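For readers who want to see the requirement written down, here is the standard textbook formalisation. The symbols are not from this article and are introduced only for illustration: $M$ is the (randomised) algorithm, $D$ and $D'$ are any two data sets that differ in one person’s data, $S$ is any set of possible outputs, and $\varepsilon \ge 0$ is the privacy parameter (smaller means more private).

```latex
\[
  \Pr[\,M(D) \in S\,] \;\le\; e^{\varepsilon} \cdot \Pr[\,M(D') \in S\,]
  \qquad \text{for all such } D,\, D' \text{ and all } S .
\]
```

When $\varepsilon$ is small, $e^{\varepsilon}$ is close to 1, so whatever is observed is nearly as likely with or without any one person’s data.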

Rock-solid differential privacy guaranteed

An algorithm satisfying this mathematical requirement comes with a few rock-solid guarantees. First, it is future-proof: a future supercomputer will not reveal more than what was originally intended. It is also robust to side information, i.e., prior knowledge a bad actor may have. And it composes well: several simple privacy-preserving algorithms can be combined like Lego bricks to obtain a more complicated algorithm, and the resulting algorithm will be privacy-preserving too (with a guarantee that weakens only gradually as more pieces are combined). Today we have at our disposal algorithms satisfying this requirement for a large array of machine learning and data analysis tasks.
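To make the “Lego” idea concrete, here is a minimal sketch using one standard building block, the Laplace mechanism, which adds random noise to a count. Nothing here comes from the article itself: the function names, the toy list of ages and the choice of privacy parameter `epsilon` are all assumptions made for illustration, and real deployments should rely on a vetted library rather than hand-rolled code like this.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace distribution centred at 0.

    The difference of two independent exponential variables with the
    same rate follows a Laplace distribution with scale 1 / rate.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records matching `predicate`.

    Adding or removing one person changes a count by at most 1, so
    Laplace noise with scale 1 / epsilon is enough for this query.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


def total_budget(epsilons) -> float:
    """Basic composition: the privacy parameters of the pieces add up."""
    return sum(epsilons)


# Hypothetical toy data: ages of ten customers (made up for this example).
ages = [23, 35, 41, 29, 67, 52, 38, 19, 44, 71]
rng = random.Random(0)

# Two simple private queries, each run with epsilon = 0.5 ...
over_40 = private_count(ages, lambda a: a > 40, epsilon=0.5, rng=rng)
under_30 = private_count(ages, lambda a: a < 30, epsilon=0.5, rng=rng)

# ... combine into one analysis whose overall parameter is their sum.
print(over_40, under_30, total_budget([0.5, 0.5]))
```

The two noisy answers can be released together, and the combined release is still differentially private, only with a larger (weaker) parameter. That is the sense in which the pieces snap together.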

The cost of privacy

But for all its advantages, differential privacy remains a very stringent requirement, and it comes at a cost (to the company): ensuring privacy when analysing data produces less accurate results. As an online customer, medical patient, or diner trying to order at a restaurant, I never see this trade-off. But for a company monetising the data it collects (your supermarket, for instance), or for an analytics firm relying on swaths of users’ information, the cost is real: the data or analytics they sell will now be less accurate, a bit noisier, and so less valuable. Which brings us to a crucial point: without a strong incentive, such as legislation or heavy fines when their customers’ data is compromised, most companies will not take meaningful steps that could hurt their bottom line. We bear the cost of privacy breaches; they would bear the cost of ensuring privacy.
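A rough sense of this accuracy cost can be had from one common technique, the Laplace mechanism, which protects a count by adding random noise whose typical size is 1 divided by the privacy parameter (usually written epsilon; smaller means more private). The sketch below is illustrative only: the function names and the customer numbers are hypothetical, and other private algorithms have different trade-offs.

```python
def expected_abs_error(epsilon: float, sensitivity: float = 1.0) -> float:
    """Average size of the noise added by the Laplace mechanism.

    The noise has scale sensitivity / epsilon, and the mean absolute
    value of Laplace noise equals its scale.
    """
    return sensitivity / epsilon


def relative_error(true_count: int, epsilon: float) -> float:
    """Typical noise as a fraction of the true count being reported."""
    return expected_abs_error(epsilon) / true_count


# Hypothetical businesses: a small cafe and a large supermarket chain.
for name, customers in [("cafe", 50), ("supermarket", 50_000)]:
    for epsilon in (0.1, 0.5, 1.0):
        pct = 100 * relative_error(customers, epsilon)
        print(f"{name:12s} epsilon={epsilon:<4} typical error = {pct:.3f}% of the count")
```

Stronger privacy (a smaller epsilon) means more noise, and the noise hurts most when the numbers being reported are small. That loss of accuracy is exactly what a company selling the statistics pays for.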

And even if entities handling our data were to use sound privacy-preserving algorithms, we would still have the very same issue: why do I need to enter my birthday and contact information to order some appetisers? Differential privacy will protect me against some of what could happen to all this data collected about me, but it will not mitigate the risks of a data breach, or of my data being used in a non-private algorithm, or many other things which could happen. Threats to privacy can come in many forms, and differential privacy only addresses some of them.

There is still much we do not know, and much we need to push for in terms of laws and technological advances if we hope to keep our personal information ours in the future. For now, however, what may be the most important action we as individuals could take is to ask for such laws from our elected officials. And, when asked by an app for our mother’s maiden name while ordering a side of chips, do the old-fashioned thing instead: call a waiter.

Image: Sergey Zolkin

Clément Canonne is an ARC DECRA Fellow and a Lecturer in the School of Computer Science of the University of Sydney. His research focuses on understanding the fundamental limits of machine learning and developing principled approaches to data privacy.

We believe in open and honest access to knowledge. We use a Creative Commons Attribution NoDerivatives licence for our articles and podcasts, so you can republish them for free, online or in print.